Today I will try creating a Kubernetes cluster with Windows nodes instead of Linux ones. The master, of course, still runs Linux. This time I will follow the Microsoft article Deploy Kubernetes cluster for Windows containers step by step and then play with the newly created cluster. I advise you to read Part 1 before continuing.

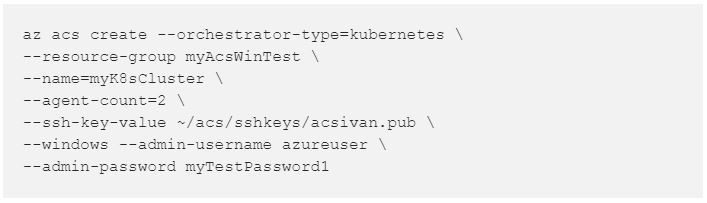

Create Kubernetes Windows Cluster

Let’s start with the usual creation of a resource group for this test so that we can easily group all cloud artifacts in it and delete everything on the fly at the end. ARM has been a great addition to Microsoft Azure.

- Create a dedicated resource group:

az group create --name myAcsWinTest --location westeurope

- Create the Kubernetes cluster (here I will use my SSH key pair created in Part 1):

- Connect to the Kubernetes cluster:

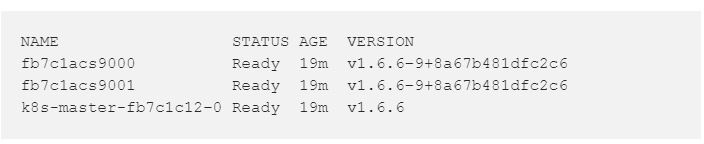

az acs kubernetes get-credentials --resource-group=myAcsWinTest --name=myK8sCluster --ssh-key-file ~/acs/sshkeys/acsivan

- Check the connection by retrieving the list of nodes:

kubectl get nodes

Cluster up and running! Awesome!

Before proceeding, open Kubernetes Dashboard to check what’s going on in the cluster using a browser.

- Connect to Kubernetes Dashboard:

- Open a browser at http://127.0.0.1:8001/ui and leave it open so that you can follow the changes applied later.

Play with Kubernetes Windows Cluster

Let’s start with the simple IIS sample, using windowsservercore instead of nanocore, as described in the Microsoft article. I will follow the same steps to introduce concepts such as pods and how to expose a pod once it has been deployed manually.

Note: I consider this bad practice because you end up with a pod and a service but no controller, such as a ReplicaSet or a Deployment, managing the pod. Later we will see how to “fix” this issue without downtime.

- Deploy an IIS windowsservercore container

Create iis.json with the following content:
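As a minimal sketch of what such a manifest could look like, the Python snippet below builds a Pod definition and prints it as JSON; saving the printed output as iis.json gives a file that kubectl can apply. The image tag `microsoft/iis:windowsservercore`, the `app: iis` label and the `beta.kubernetes.io/os: windows` node selector are assumptions made for illustration, not necessarily the exact content of the Microsoft sample.

```python
import json


def build_iis_pod(image="microsoft/iis:windowsservercore"):
    """Return a Pod manifest as a plain dict (the image tag is an assumption)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": "iis",
            # Assumed label, so that a service selecting app=iis matches this pod.
            "labels": {"app": "iis"},
        },
        "spec": {
            "containers": [
                {
                    "name": "iis",
                    "image": image,
                    "ports": [{"containerPort": 80}],
                }
            ],
            # Assumed node label used to schedule the pod on a Windows node.
            "nodeSelector": {"beta.kubernetes.io/os": "windows"},
        },
    }


if __name__ == "__main__":
    # Print the manifest; save the output as iis.json to use it with kubectl.
    print(json.dumps(build_iis_pod(), indent=2))
```

Generating the manifest from a function keeps the image and labels in one place, which helps when the same values are reused later for a Deployment.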

We can now apply the configuration to create a new Pod named iis:

kubectl apply -f iis.json

- Check running Pod:

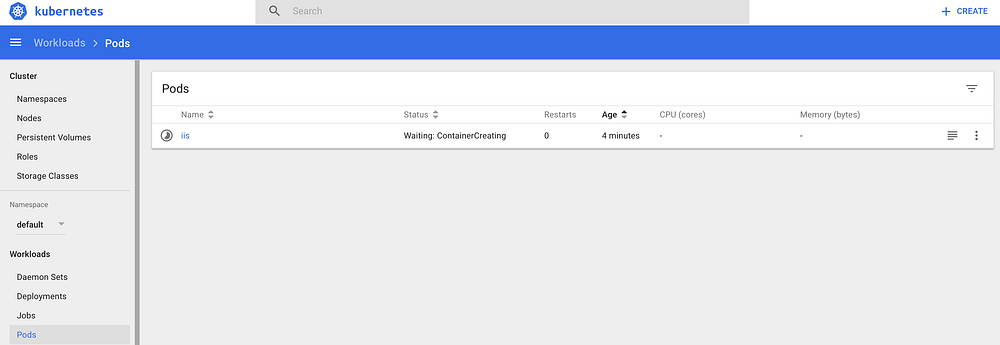

kubectl get pods --watch

The pod will take around 10 minutes to be created, because the windowsservercore image is quite big (~5 GB). To save time you can expose the pod right now; there is no need to wait for pod creation in this test, and LoadBalancer creation will start immediately.

- Open the Kubernetes Dashboard at the Pods section and you will see something like:

- Expose Pod with a service:

kubectl expose pods iis --port=80 --type=LoadBalancer

(In a future post I will try Ingress in a hybrid cluster; let’s push Kubernetes to its limits!)

- Wait for LoadBalancer activation with:

kubectl get svc --watch

or check manually with the Kubernetes Dashboard.

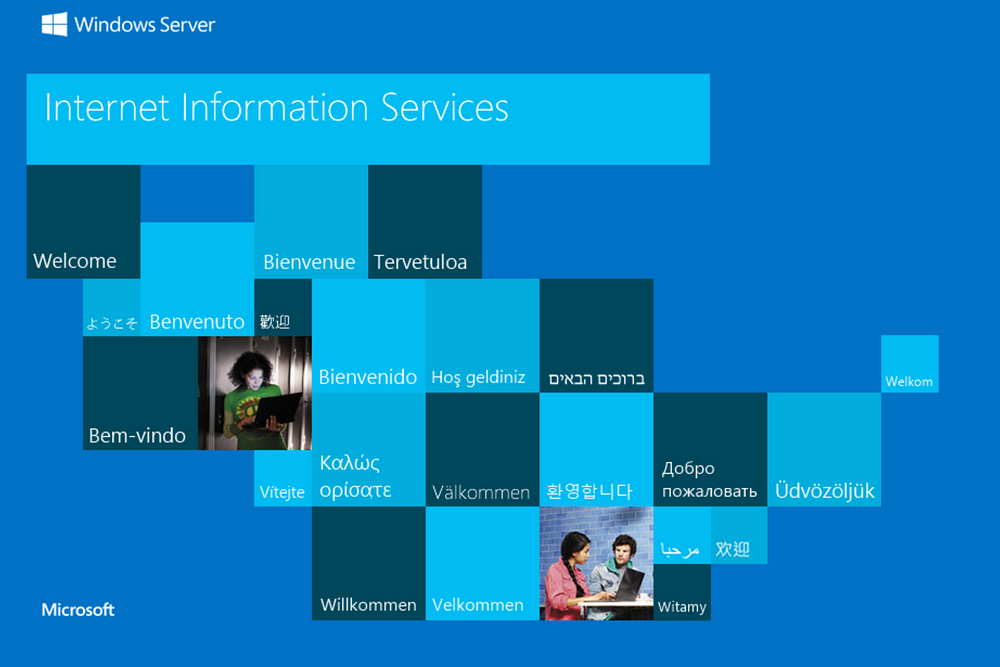

Once the service has an external IP, browse to it and you should see:

If I had a pod to scale I would _________?

Now let’s try to scale out our iis pod. Wait… We can’t scale a pod directly. We need a ReplicaSet or a Deployment for this. How would you handle this?

Here I use a trick I found during my research that lets you scale your pods without any downtime for end users. There is probably a better way to do it; if so, please add it in the comments.

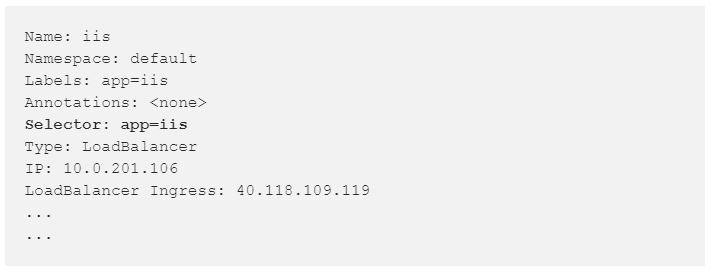

Brief Explanation needed

- Run

kubectl describe service iis

and you can see that the service has a selector equal to “app=iis”. This means that any pod with this label will be exposed through this LoadBalancer.

The idea here is to create a new deployment that will create 2 new replicas of our iis pod using the same label.
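To make the selector idea concrete, here is a small Python sketch of equality-based label matching. It is a simplified model, not Kubernetes code: the `matches` and `endpoints` helpers are hypothetical, and the `pod-template-hash` value and the `other` pod are illustrative.

```python
def matches(selector, labels):
    """Equality-based selection: every selector key must be present with the same value."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector, pods):
    """Return the names of the pods whose labels satisfy the selector."""
    return [name for name, labels in pods.items() if matches(selector, labels)]


service_selector = {"app": "iis"}

pods = {
    # The manually created pod.
    "iis": {"app": "iis"},
    # A pod created by a deployment; extra labels do not prevent a match.
    "iis-1894189856-9fwsp": {"app": "iis", "pod-template-hash": "1894189856"},
    # An unrelated pod that the service ignores.
    "other": {"app": "something-else"},
}

print(endpoints(service_selector, pods))
# prints ['iis', 'iis-1894189856-9fwsp']
```

Because the service only looks at labels, it does not matter whether a pod was created by hand or by a deployment, which is what makes the switch possible without downtime.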

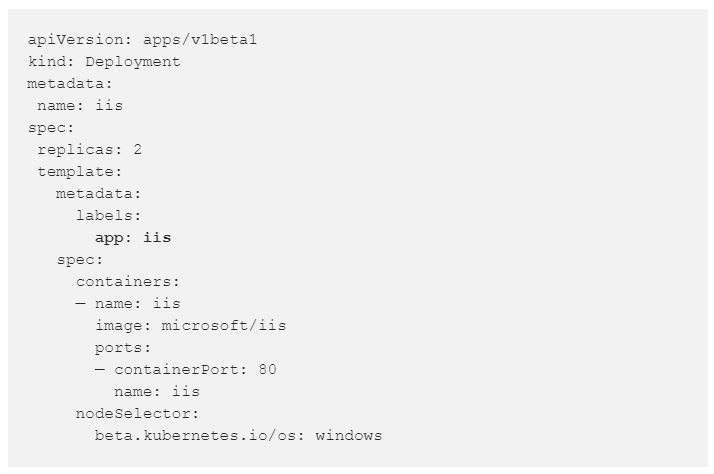

- Create a file called iisdeployment.yaml with the following content:
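A hedged sketch of what such a deployment definition could contain, again built as a Python dict and printed as JSON, a format kubectl also accepts. The `apps/v1` API version, the image tag and the node selector are assumptions; an older cluster such as the one used here may expect an earlier API group. The deployment name iis and the `app: iis` label follow the names used in this post.

```python
import json


def build_iis_deployment(replicas=2, image="microsoft/iis:windowsservercore"):
    """Return a Deployment manifest as a dict (API version and image are assumptions)."""
    labels = {"app": "iis"}  # same label the iis service selects on
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "iis"},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "iis",
                            "image": image,
                            "ports": [{"containerPort": 80}],
                        }
                    ],
                    # Assumed node label used to schedule pods on Windows nodes.
                    "nodeSelector": {"beta.kubernetes.io/os": "windows"},
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_iis_deployment(), indent=2))
```

The important detail is that the pod template carries the same label as the service selector, so the new replicas start receiving traffic as soon as they are ready.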

- Create deployment with:

kubectl create -f iisdeployment.yaml

- Run

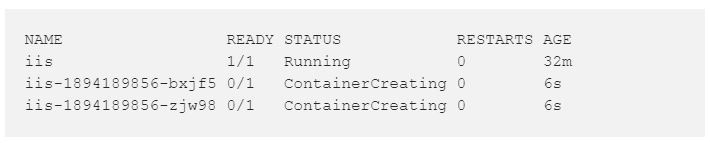

kubectl get pods

now and you can see 1 running pod and 2 additional ones being created:

Note: during these steps, you can check your service using a browser and see that it is up and running without problems.

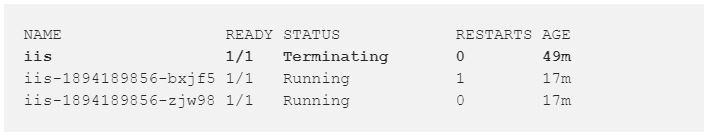

- You can now delete the original pod without any downtime for end users by running:

kubectl delete pods iis

Scale up to 4 replicas!

- This is easy now:

kubectl scale deployments/iis --replicas=4

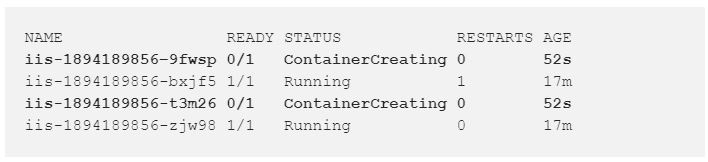

What if we have a problem with a pod, iis-1894189856-9fwsp, and we want to isolate it from the rest while keeping it running for debugging purposes?

- I do this by running:

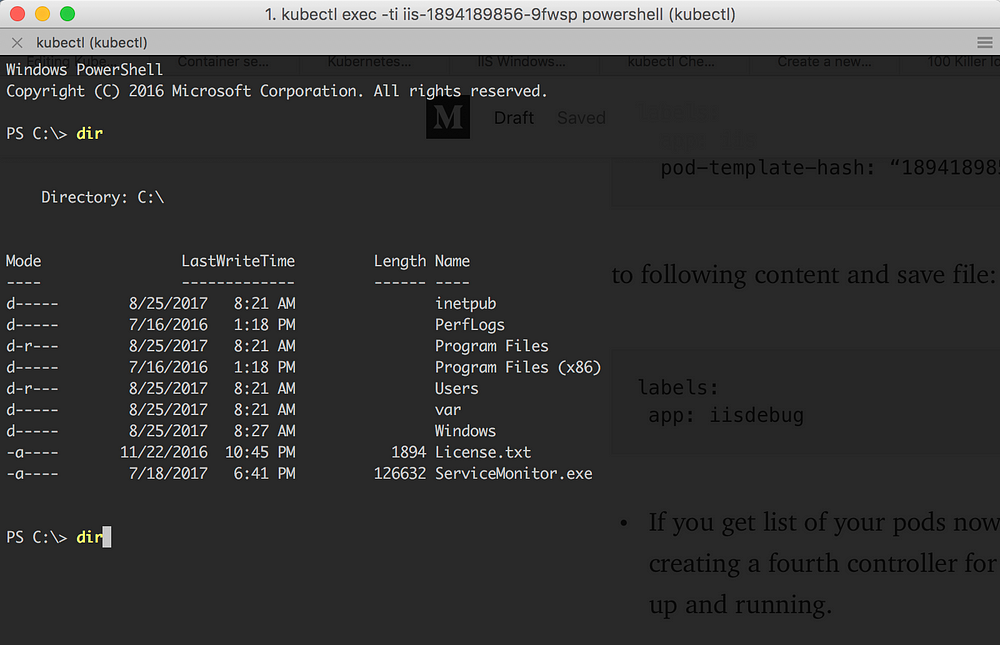

kubectl edit pods iis-1894189856-9fwsp

- The file opens in your default editor

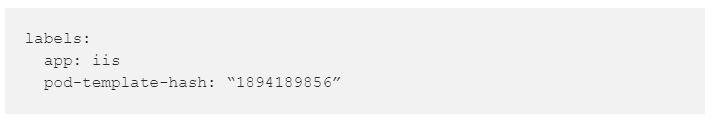

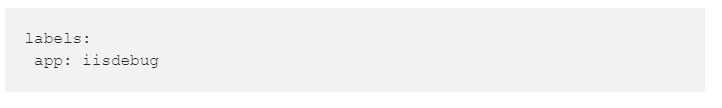

- Change the labels section from:

to the following content and save the file:

- If you get the list of your pods now, you will see that Kubernetes is already creating a new fourth replica for the iis deployment, while your relabeled pod is still there, up and running.
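The behaviour described above can be sketched as a toy reconciliation loop in Python. This is a simplified model under stated assumptions, not how Kubernetes is implemented: the pod names, the `iis-debug` label value and the `matching` and `reconcile` helpers are all hypothetical.

```python
def matching(selector, pods):
    """Names of the pods whose labels contain every selector key/value pair."""
    return [
        name
        for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    ]


def reconcile(selector, pods, desired):
    """Create pods until `desired` of them match the selector; return the new names."""
    created = []
    while len(matching(selector, pods)) < desired:
        name = f"iis-new-{len(created)}"  # hypothetical naming scheme
        pods[name] = dict(selector)
        created.append(name)
    return created


selector = {"app": "iis"}
desired_replicas = 4
pods = {f"iis-{i}": {"app": "iis"} for i in range(4)}  # hypothetical pod names

# Isolate one pod by changing its label (hypothetical new value).
pods["iis-0"]["app"] = "iis-debug"

created = reconcile(selector, pods, desired_replicas)
print(created)                   # prints ['iis-new-0']
print(matching(selector, pods))  # prints ['iis-1', 'iis-2', 'iis-3', 'iis-new-0']
print(len(pods))                 # prints 5
```

The relabeled pod is never deleted; it simply stops counting towards the desired replica count, so a replacement is created and the isolated pod stays available for debugging.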

How do I connect to a running Windows Server Core container?

- You can now debug this container, knowing that it is not exposed externally because our iis service uses app:iis as its selector.

- Connect to it using:

kubectl exec -ti iis-1894189856-9fwsp powershell

- From your computer you can now easily run any PowerShell command in your running image in the cloud!

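- Remember to delete everything when you have finished playing with your cluster. Deleting the myAcsWinTest resource group (for example with az group delete --name myAcsWinTest) removes all the artifacts created for this test.

In Part 3 I will try to create a hybrid cluster with Windows and Linux nodes!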

Do you want more information about Kubernetes on Azure? Contact us!